Ingest Excel files into the Data Lake

Intermediate | 45 Minutes

Enterprise Integration Data Fabric

Overview

While most transactional data may come from Infor applications or third-party applications in public or hybrid clouds, some systems still generate data in Excel formats. Excel suits human readers but is generally not ideal for systems to read and process.

Scheduling and automating the import of Excel files into the Infor Data Lake can complement your enterprise data and let you operationalize custom data models to unlock valuable insights.

📋 Requirements


Tutorial

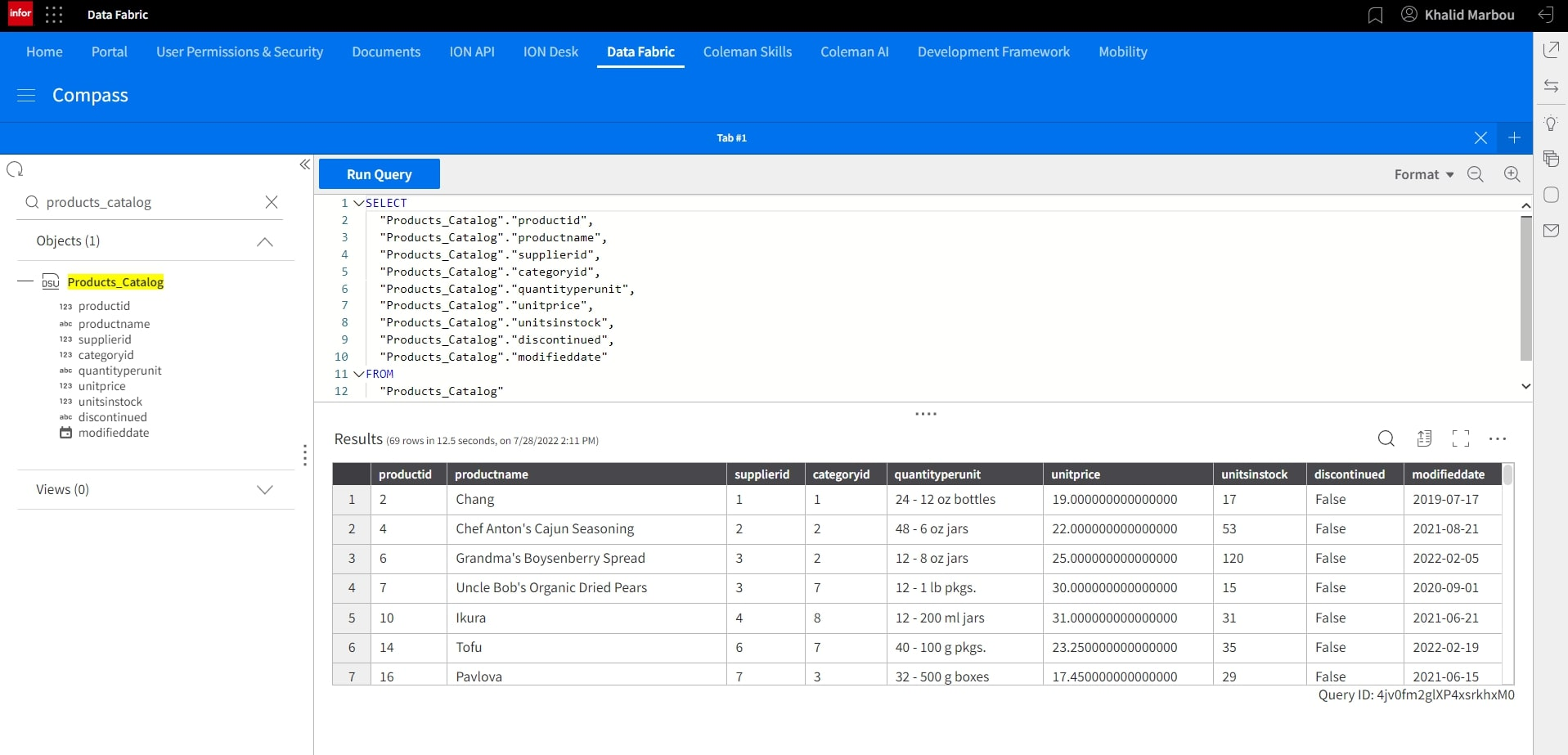

The Infor Data Lake uses object storage, so it can store files, or “data objects”, in virtually any data format. Data objects can be queried only if they conform to one of the recognized formats, which include NDJSON (newline-delimited JSON) and DSV (delimiter-separated values: CSV, TSV, PSV, or user-defined).
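To make the two queryable format families concrete, the sketch below serializes the same records as NDJSON and as DSV using only the Python standard library. The sample records, field names, and helper functions are hypothetical and are not part of any Infor API; the sketch only illustrates what the formats look like.

```python
import csv
import io
import json

# Hypothetical sample records; the field names are illustrative only.
records = [
    {"item": "A-100", "quantity": 5, "warehouse": "North"},
    {"item": "B-200", "quantity": 12, "warehouse": "South, Dock 2"},
]


def to_ndjson(rows):
    """NDJSON: one complete JSON object per line."""
    return "\n".join(json.dumps(row) for row in rows) + "\n"


def to_dsv(rows, delimiter=","):
    """DSV: a header line, then one delimited line per record."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=list(rows[0]),
        delimiter=delimiter,
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


ndjson_text = to_ndjson(records)
csv_text = to_dsv(records)        # comma-separated (CSV)
psv_text = to_dsv(records, "|")   # pipe-separated (PSV)

print(ndjson_text)
print(csv_text)
print(psv_text)
```

Note that the `csv` module quotes a value only when it contains the active delimiter, so the warehouse value with a comma is quoted in the CSV output but not in the PSV output.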

This tutorial walks through automating the ingestion of Excel files through the ION sFTP File Connector, using ION Scripting to convert each file from Excel to CSV, ingesting the resulting object into Data Lake, and finally using it to build a comprehensive business domain model with Metagraph.
